What is Infrastructure as Code (IaC)?

Infrastructure as Code (IaC) is the practice of provisioning infrastructure through software to achieve consistent and predictable deployments.

Core Concepts

Infrastructure as Code (IaC) is:

- Defined in Code

Code is integral to IaC: the configuration is defined in code written in JSON, YAML or HCL (HashiCorp Configuration Language).

- Versioned

Since the infrastructure is defined in code, we should treat it as code too and store it in a version control system such as Git (hosted, for example, on GitHub) so that multiple developers can work on the infrastructure together.

- More Declarative than Imperative

The imperative approach is procedural: you spell out the steps to perform. The declarative approach describes the desired end state and leaves the steps to the tool.

Imperative style is mostly about giving instructions, whereas declarative style is about providing configuration in JSON, YAML or HashiCorp Configuration Language (HCL).

Imperative vs Declarative - simple example

Let's say we want to make a cheeseburger using software:
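A minimal sketch of the contrast. The imperative version is shown as comments listing the steps; the declarative version uses a hypothetical `kitchen_cheeseburger` resource type, invented purely for illustration and not part of any real provider.

```hcl
# Imperative: we tell the software HOW to make the burger, step by step.
#   1. get a bun, a patty, cheese and lettuce
#   2. grill the patty
#   3. put the cheese on the patty
#   4. assemble bun, patty, cheese and lettuce
#
# Declarative: we describe WHAT we want and let the tool work out the steps.
# "kitchen_cheeseburger" is a hypothetical resource type used for illustration.
resource "kitchen_cheeseburger" "lunch" {
  bun      = "sesame"
  patty    = "beef"
  cheese   = "cheddar"
  toppings = ["lettuce", "tomato"]
}
```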

- Idempotent & Consistent

Idempotent - if the current state already matches the desired state and someone applies the same change again, nothing is changed, because the tool sees that the system is already in the state it is supposed to be in.

Consistent - every time the same change is applied, it results in the same state.

- Push or Pull

Either the configuration is pushed to the target ecosystem or pulled by the target ecosystem

Importance of Terraform in IaC

What is Terraform?

Terraform is an open-source, vendor-agnostic infrastructure automation tool developed by HashiCorp to programmatically provision the resources an application needs. It is written in Go and uses a declarative syntax, written in HashiCorp Configuration Language (HCL) or JSON.

Being open-source means it is supported by a big developer community beyond HashiCorp, and being vendor-agnostic means it opens up the possibility of using whichever cloud providers best suit one's needs.

It uses a push deployment strategy, which eliminates the need for agents in your infrastructure. In the pre-Terraform era, agents were deployed on instances to listen for events and act on them. As the infrastructure grew, sysadmins spent more and more time maintaining these agents, leading to what we might call agent fatigue.

Architecture & Core Components

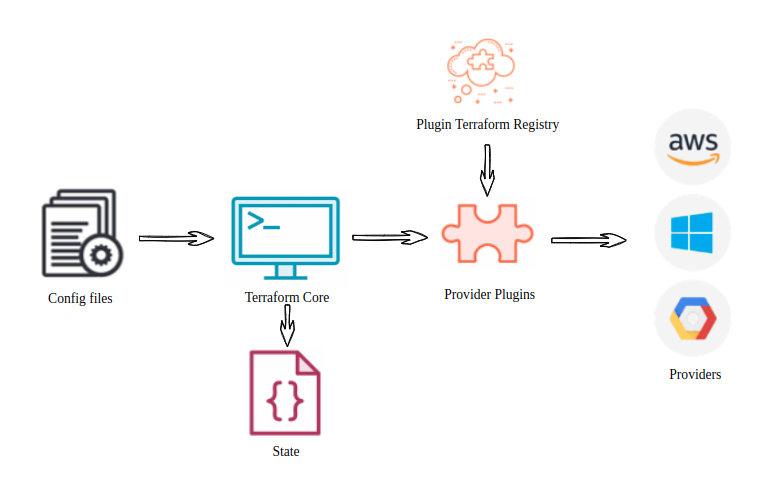

Architecture

Core Components

Terraform Core - as the name suggests, it does most of the heavy lifting: reading the configuration, writing and maintaining the state, and talking to provider plugins.

Config Files - The *.tf files define the configuration in HCL; the same configuration can also be written in JSON in *.tf.json files. HCL is similar to JSON and easy to read.

Provider Plugins - The plugins are used for communicating with the providers. If a provider plugin is not available locally, it is downloaded from the public Terraform Registry.

State - maintained by Terraform to track the state of the provisioned infrastructure. This information is important because the core needs it to evaluate the changes to be applied and come up with the plan.

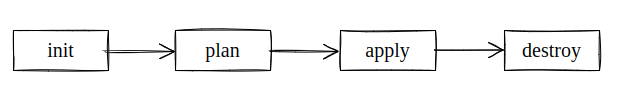

Lifecycle

init: Terraform needs provider plugins. This command scans the configuration files to work out which provider plugins and modules are required and downloads them from the public Terraform Registry unless another location is specified. Terraform also needs somewhere to store the state data, so init initialises the state backend as well; if no backend is configured, the state is kept in the current working directory.

plan: This is an optional step. Terraform compares the current configuration with the state data and comes up with a plan of the changes it wants to execute. It prints the plan, which can also be saved to a file and passed as input to the apply command.

apply: Terraform applies the plan and executes the changes using the provider plugins. The resources are created or modified in the target environment and the state data is updated to reflect the changes. If we run apply again without making any changes, Terraform reports that there are no changes to make, thanks to its idempotent nature.

destroy: Terraform destroys every resource in the target environment that is tracked in the state data.

Data Types and Variables

Data Types

Like most programming languages, Terraform supports the typical data types.

Primitives - String, Number, Boolean

Collections - List, Set, Map

Structural - Tuple, Object
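As an illustration, the type families above could be declared as follows. This is a sketch only: the variable names and default values are hypothetical and chosen just to show the type syntax.

```hcl
# Primitives
variable "region" {
  type    = string
  default = "us-east-1"
}

variable "instance_count" {
  type    = number
  default = 1
}

variable "enable_dns" {
  type    = bool
  default = true
}

# Collections: every element has the same type
variable "availability_zones" {
  type    = list(string)
  default = ["us-east-1a", "us-east-1b"]
}

variable "tags" {
  type = map(string)
  default = {
    project = "first_web_app"
  }
}

# Structural: each attribute or position has its own type
variable "server" {
  type = object({
    name = string
    port = number
  })
  default = {
    name = "nginx"
    port = 80
  }
}

variable "endpoint" {
  type    = tuple([string, number])
  default = ["10.0.0.10", 80]
}
```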

Variables

Variables are fundamental constructs in any programming language and add dynamic behaviour to it. They are used to store temporary values that assist the programming logic in simple as well as complex programs.

For the sake of clarity, I would like you to think of the complete Terraform configuration as a single function. The variables can then be seen as the arguments, return values and local variables of that function.

Input

Input variables are the function arguments. They are used to pass values into the configuration or module from outside, before the code is executed. Like local variables, they are used to assign dynamic values to resource attributes. Their main purpose is to act as inputs to modules: input variables declared within a module accept values from the calling (parent) module.

Note: Modules are self-contained pieces of Terraform code that perform certain predefined deployment tasks.

We can set the following arguments when declaring an input variable:

type — identifies the type of the variable being declared

default — default value if the value is not provided explicitly

description — a description of the variable. This is used to generate documentation for the module

validation — to define validation rules

sensitive — a boolean. If set to true, Terraform masks the variable’s value anywhere it displays the value
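The arguments listed above might be combined as in the sketch below. The variable names, the allowed instance types and the error message are assumptions made for illustration.

```hcl
variable "instance_type" {
  type        = string
  default     = "t2.micro"
  description = "EC2 instance type for the web server."

  # Reject values outside an (assumed) allow-list before anything is created.
  validation {
    condition     = contains(["t2.micro", "t3.micro"], var.instance_type)
    error_message = "The instance_type must be t2.micro or t3.micro."
  }
}

# Hypothetical secret: Terraform masks its value in plan and apply output.
variable "db_password" {
  type        = string
  description = "Password for a hypothetical database."
  sensitive   = true
}
```

A value can then be supplied from outside the configuration, for example with `terraform apply -var="instance_type=t3.micro"` or through a `terraform.tfvars` file.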

Local

Local variables are declared in a locals block and are only accessible within the module/configuration where they are declared. A locals block is a group of key-value pairs that can be used in the configuration. The values can be hard-coded or be references to other variables or resources.
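A small sketch of a locals block, assuming a hypothetical `environment` input variable. Local values are read with the `local.` prefix.

```hcl
# Hypothetical input variable used by the locals below.
variable "environment" {
  type    = string
  default = "dev"
}

locals {
  project     = "first_web_app"                     # hard-coded value
  name_prefix = "${local.project}-${var.environment}" # built from other values

  common_tags = {
    project     = local.project
    environment = var.environment
  }
}
```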

Output

Output variables are the outputs of a module. One can think of them as the return values of a function. Output variables are the way a child module communicates values back to the module that calls it, or the root module prints values to the user.
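A minimal sketch of an output. It assumes that a resource `aws_instance.web` is declared elsewhere in the same module; both the resource name and the output name are hypothetical.

```hcl
# Exposes one attribute of a resource to whoever calls this module.
# Assumes aws_instance.web is declared elsewhere in the same module.
output "web_public_ip" {
  description = "Public IP address of the web server"
  value       = aws_instance.web.public_ip
}
```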

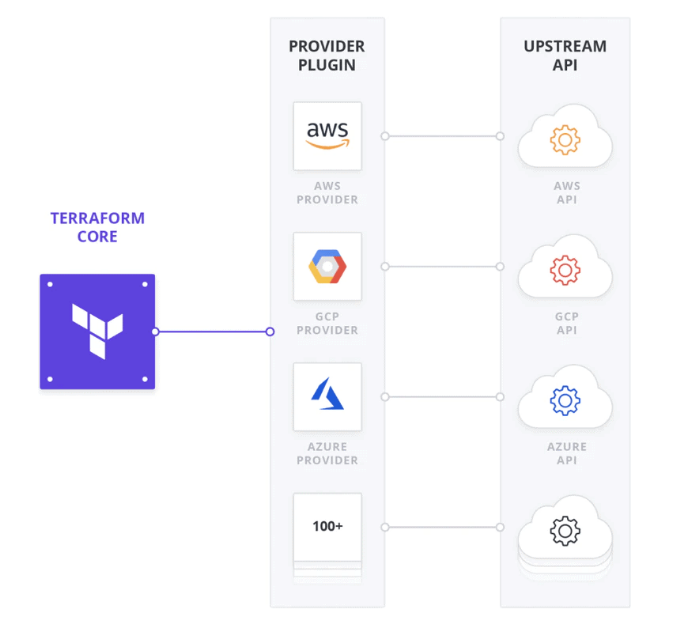

Provider

Terraform is primarily used to provision infrastructure on cloud platforms such as AWS and GCP. Providers are the plugins that let Terraform provision infrastructure on the particular platform they are configured for. Every supported service has a provider that defines which resources are available and performs the API calls to manage those resources.

Here is a diagram illustrating that concept

Providers are considered to be the superpower of Terraform. They are available in both public and private registries in Official, Verified and Community versions.

The Official version is developed and maintained by HashiCorp.

The Verified version is developed by a third party and verified by HashiCorp.

The Community version is developed and maintained by the community and is not verified by HashiCorp.
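A configuration can state which provider it needs and where to get it from. The sketch below pins the official AWS provider from the public registry; the version constraint is an assumption, so pick the one that suits your setup.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws" # official provider in the public registry
      version = "~> 5.0"        # assumed constraint, adjust as needed
    }
  }
}
```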

Module

A Terraform module is a configuration that we create with inputs, resources and outputs. Modules enable reuse of configuration. They can be sourced locally or from remote registries and are versioned. The init step of the Terraform lifecycle downloads the required modules to the working directory.

The components of a module are input variables, local variables and output variables, and all of them are optional.

Parent and child modules can only communicate through these interfaces: the parent passes values in through the child's input variables, and the child hands values back through its outputs. A parent module has no direct access to the input variables, resources and local variables of a child module, and vice versa.
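A sketch of that communication from the parent's side. The module path `./modules/web_server`, its input variable `instance_type` and its output `public_ip` are all hypothetical.

```hcl
# Root (parent) module.
# "./modules/web_server" is a hypothetical local module that declares an
# input variable "instance_type" and an output "public_ip".
module "web_server" {
  source        = "./modules/web_server"
  instance_type = "t2.micro" # parent -> child, through an input variable
}

# child -> parent, through an output of the child module
output "web_server_ip" {
  value = module.web_server.public_ip
}
```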

Resources

Resources are the most important part of defining infrastructure. Each resource describes one or more infrastructure objects such as virtual networks or compute instances. Most Terraform providers have a number of different resources that map to the appropriate APIs to manage that particular infrastructure type.

Resource definitions and properties vary depending on the type of resource and the provider. The provider's documentation generally has the full list of available resources and their configuration options.

Apply the concepts in real world

Installation

Installing Terraform is quite simple and well documented by HashiCorp. I followed the Install Terraform documentation and chose the instructions for my OS.

We can verify the installation by running the command

terraform -version

terraform -help

## This command can be used to look at the manual of the various commands provided

Usage pattern of the terraform command

terraform [global options] <subcommand> [args]

Deploying the first configuration

With the basic knowledge of Terraform acquired so far, we will apply the concepts in a small assignment: deploying a web application using nginx in a VPC on AWS and accessing it over the internet.

First we will create a directory named first_web_app and, inside it, a file named main.tf to which we add all the necessary configuration before applying it to AWS.

To achieve this, we will break the task down into smaller pieces and then execute them. Let's list them below.

1. Choose a provider - we have zeroed in on Amazon Web Services

provider "aws" {

access_key = "ACCESS_KEY"

secret_key = "SECRET_KEY"

region = "us-east-1"

}

Caution: Never hard-code your credentials in the configuration. We do it here only for the sake of the example; never do this in a real implementation.
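One way to keep credentials out of the configuration is to let the AWS provider pick them up from the environment. A minimal sketch, where the `aws_region` variable is a hypothetical addition:

```hcl
# Hypothetical variable so that the region is not hard-coded either.
variable "aws_region" {
  type    = string
  default = "us-east-1"
}

# No access_key or secret_key here: the AWS provider can read credentials from
# the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables or
# from the shared credentials file (~/.aws/credentials).
provider "aws" {
  region = var.aws_region
}
```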

2. Configure Data

This data block is a data source of type aws_ssm_parameter. The parameter name is the path to the latest Amazon Linux 2 image, which is used when creating the instance.

data "aws_ssm_parameter" "ami" {

name = "/aws/service/ami-amazon-linux-latest/amzn2-ami-hvm-x86_64-gp2"

}

3. Configure Networking

resource "aws_vpc" "vpc" {

cidr_block = "10.0.0.0/16"

enable_dns_hostnames = true

}

resource "aws_internet_gateway" "igw" {

vpc_id = aws_vpc.vpc.id

}

resource "aws_subnet" "subnet_aws" {

cidr_block = "10.0.0.0/24"

vpc_id = aws_vpc.vpc.id

map_public_ip_on_launch = true

}

4. Configure Routing table

resource "aws_route_table" "rtb" {

vpc_id = aws_vpc.vpc.id

route {

cidr_block = "0.0.0.0/0"

gateway_id = aws_internet_gateway.igw.id

}

}

resource "aws_route_table_association" "rta-subnet_one" {

subnet_id = aws_subnet.subnet_aws.id

route_table_id = aws_route_table.rtb.id

}

5. Configure Security Groups

resource "aws_security_group" "nginx-security-group" {

name = "nginx_security_group"

vpc_id = aws_vpc.vpc.id

# HTTP access from anywhere

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

# outbound internet access

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

6. Creating instances

resource "aws_instance" "nginx_web_app" {

ami = nonsensitive(data.aws_ssm_parameter.ami.value)

instance_type = "t2.micro"

subnet_id = aws_subnet.subnet_aws.id

vpc_security_group_ids = [aws_security_group.nginx-security-group.id]

user_data = <<EOF

#! /bin/bash

sudo amazon-linux-extras install -y nginx1

sudo service nginx start

sudo rm /usr/share/nginx/html/index.html

echo '<html><head><title>QIMAone Blog Server</title></head><body style="background-color:#1F778D"><p style="text-align: center;"><span style="color:#FFFFFF;"><span style="font-size:28px;">My first server deployed using Terraform! Have a 🌮</span></span></p></body></html>' | sudo tee /usr/share/nginx/html/index.html

EOF

}

Deploy the configuration

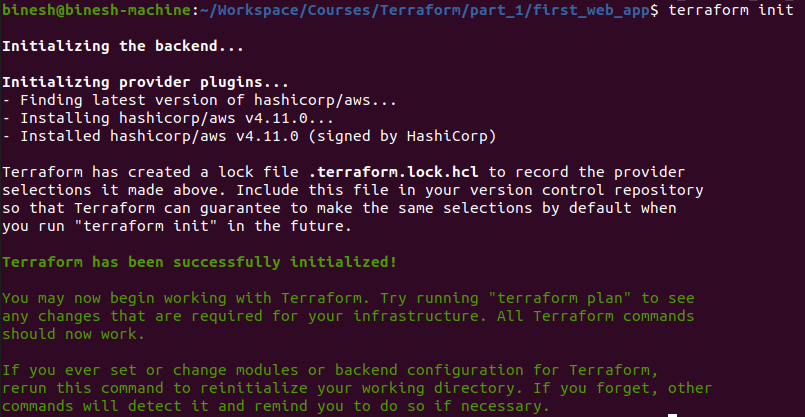

Init

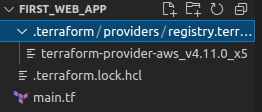

After adding all the configuration to main.tf, we initialise Terraform by executing the command terraform init. This downloads the AWS provider plugin and also creates the lock file.

Note: adding everything in one file is only for the sake of demo

This screenshot shows the lock file (.terraform.lock.hcl) and the downloaded plugin executable

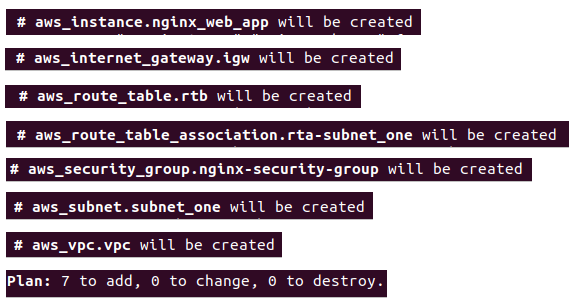

Plan

Now we will execute the terraform plan command, which tells us about the resources it is going to create in our environment.

The command executed is

terraform plan -out first_web_app.tfplan

This will save the plan to first_web_app.tfplan.

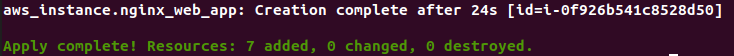

Apply

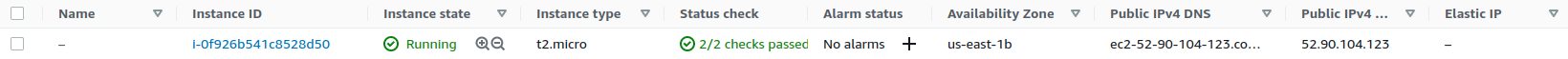

Now comes the final step, where we apply the configuration and create the instance.

The command is

terraform apply "first_web_app.tfplan"

This is how it completes: we can see that the web app has been created and 7 resources have been added.
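To find the address of the web app without opening the AWS console, one option is to add an output to main.tf before running plan and apply. This is a sketch of an addition that is not part of the configuration above; the output name is hypothetical.

```hcl
# Prints the public DNS name of the instance at the end of terraform apply.
output "nginx_public_dns" {
  description = "Public DNS name of the nginx web server"
  value       = aws_instance.nginx_web_app.public_dns
}
```

The value is shown once apply finishes and can be printed again later with `terraform output`.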

Destroy

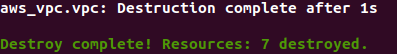

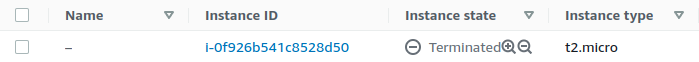

The command executed is

terraform destroy

Conclusion

This is the first part of the Terraform starter article. It will be followed by more articles covering advanced concepts, so do keep returning to this page.

💟 Thank you!!

We at QIMA use terraform to manage our infrastructure and we’re hiring!!

If you have any suggestion or remark, please share them with us. We are always keen to discuss and learn!

Written by Binesh, Software Engineer at QIMA